
Find out how businesses can develop their own multi-model AI Copilots, create AI-driven workflows, build advanced AI Agents and turn last-mile challenges into celebrated milestones of innovation and growth.

Explore the challenges and opportunities of integrating generative AI into business environments. Learn about our Generative AI Solution Accelerator and Catalyst Hub, which are powerful tools to help navigate this complex landscape.

The buzz around generative AI is undeniable. From boardrooms to coffee shops, everyone's caught up in its potential to revolutionise industries and redefine work. Yet, for all the excitement, the road to actual adoption in the business world is fraught with obstacles.

Let's face it, most organisations aren't greenfield sites. They're navigating a complex landscape of legacy systems, siloed processes, and often, a cautious approach to adopting new technologies. Integrating a powerful tool like generative AI into this environment isn't as simple as plugging it in and watching the magic happen.

Just as the final leg of a marathon can be the most gruelling, getting a generative AI application from prototype to production is no easy feat. It requires careful consideration, strategic planning, and a tailored approach that addresses the specific needs and limitations of each unique business context.

That's where our Generative AI Solution Accelerator comes in. We've designed this programme to bridge the gap between the potential of generative AI and its practical implementation within your organisation.

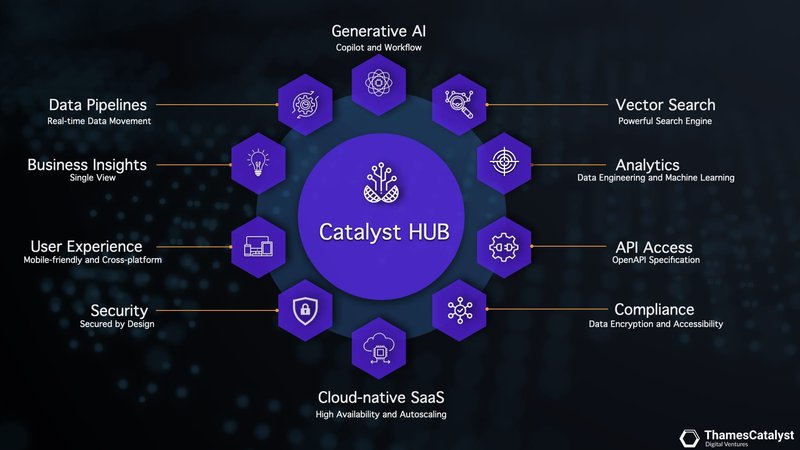

Powered by our modern data and insights platform, Catalyst Hub, the Accelerator is a scalable solution designed for bringing generative AI applications from prototype to production within a short time-frame. With leading foundation models already integrated, an intuitive multi-model Copilot interface allows you to experiment with real-world business scenarios from the outset.

In as little as six weeks, organisations can gain a clear understanding of their specific use cases, explore the possibilities based on their unique needs and develop an actionable strategy to harness the potential of generative AI.

Finding the Optimal Balance: Cost, Speed and Performance

When it comes to generative AI, getting your application out there quickly often means using a pre-trained model available through an API. However, when data security takes precedence, the appeal of privately hosted open-source models becomes evident, serving as a secure and viable point of departure.

Fortunately, the world of generative AI is brimming with innovation. We have a wealth of models at our fingertips, ready to tackle any specific use case. The key is to remain flexible and steer clear of vendor lock-in. We need robust solutions that can be powered by a variety of models, ensuring adaptability and future-proofing.

This is where our Accelerator steps in, offering an API platform that opens the door to a diverse selection of top-tier AI models. We're talking leading names like Anthropic, Mistral AI, OpenAI, Stability AI and Google Vertex AI, among others.

The models themselves range from a nimble 7 billion parameters to a colossal 1 trillion. Sizes are evolving at breakneck speed, and models at both the small and the large end of the scale keep getting more capable.

Having access to this spectrum of models is crucial. For tasks demanding top-level performance, like legal research or financial analysis, you need the heavyweights – the most powerful models built for complexity.

But sometimes, you need a different approach. When speed and targeted accuracy are paramount, a more compact model delivers near-instant responsiveness. It's clear that generative AI isn't a one-size-fits-all solution within an organisation.

Businesses need to find that perfect balance of intelligence and speed for their specific workloads, choosing from a range of foundation models. And that's precisely where our AI accelerator shines.

It simplifies the process of finding the sweet spot between cost, speed and performance. By providing access to a variety of models, businesses can evaluate the cost-performance trade-off and choose the model that offers the best value for their unique needs.
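To make the trade-off concrete, here is a minimal Python sketch of how a team might shortlist a model: set a quality floor and a latency ceiling for the workload, then take the cheapest model that clears both. The model names, prices, latencies and quality scores are hypothetical placeholders rather than measurements of any real model, and `pick_model` is an illustrative helper, not part of the Accelerator's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelProfile:
    name: str
    cost_per_1k_tokens: float  # hypothetical price, arbitrary currency units
    latency_seconds: float     # hypothetical median response time
    quality_score: float       # hypothetical evaluation score between 0 and 1


# Illustrative figures only; replace them with your own measurements.
CANDIDATES = [
    ModelProfile("small-model", 0.0002, 0.4, 0.62),
    ModelProfile("medium-model", 0.002, 1.1, 0.78),
    ModelProfile("large-model", 0.03, 3.5, 0.91),
]


def pick_model(candidates, min_quality, max_latency_seconds):
    """Return the cheapest model that meets both limits, or None if none does."""
    eligible = [
        m for m in candidates
        if m.quality_score >= min_quality
        and m.latency_seconds <= max_latency_seconds
    ]
    if not eligible:
        return None
    return min(eligible, key=lambda m: m.cost_per_1k_tokens)


# A chat assistant that must answer within two seconds at reasonable quality.
choice = pick_model(CANDIDATES, min_quality=0.75, max_latency_seconds=2.0)
print(choice.name if choice else "no model meets the requirements")
```

In practice the quality score would come from evaluating each model on a sample of your own tasks, which is why experimenting with real business scenarios early matters.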

An Intuitive, Multi-Model Copilot Interface for the Enterprise

The concept of a multi-model Copilot addresses a critical concern within the AI landscape: the lack of transparency and control. While traditional Copilots offer convenience, they often operate as opaque black boxes, leaving users in the dark about the underlying models, data handling and decision-making processes.

This lack of insight can be a significant barrier to trust and adoption, especially within the enterprise environment where data security and explainability are paramount.

Our solution accelerator tackles this challenge head-on by providing an intuitive multi-model Copilot interface. Imagine a familiar chat interface, but with the added power of choosing and switching between different AI models.

This empowers users to select the most suitable model for their specific task, whether it's generating creative content, analysing complex data or translating languages.

Furthermore, our interface allows users to save system prompts, essentially creating a library of instructions for the models. This level of control promotes more consistent and reliable results and reduces the risk of unexpected outputs or "hallucinations" that can occur with black-box Copilots.

The benefits of a multi-model approach extend beyond transparency and control. Consider the concept of "model rotation" employed within our own in-house Copilot. During development, one model might be tasked with generating code, while another focuses on reviewing and refining it.

This collaborative approach mirrors the process of consulting a team of experts, each with their own strengths and specialisations. By leveraging the diverse capabilities of multiple models, we can ensure a more comprehensive and robust solution.
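The model rotation idea can be sketched in a few lines of Python. This is an illustration under stated assumptions, not our in-house implementation: a "model" is represented as any function that maps a prompt to text, `rotate` is a hypothetical helper, and the two stub functions stand in for real model calls so the example runs without network access.

```python
from typing import Callable

# Assumption: a model is any function from a prompt string to a text reply.
Model = Callable[[str], str]


def rotate(task: str, generator: Model, reviewer: Model) -> dict:
    """Ask one model for a draft, then ask a second model to review it."""
    draft = generator(f"Write code for this task:\n{task}")
    review = reviewer(f"Review this code and list any problems:\n{draft}")
    return {"draft": draft, "review": review}


# Stand-in models; in a real setup each would call a different provider.
def stub_generator(prompt: str) -> str:
    return "def add(a, b):\n    return a + b"


def stub_reviewer(prompt: str) -> str:
    return "No problems found." if "return a + b" in prompt else "Needs changes."


result = rotate("Add two numbers", stub_generator, stub_reviewer)
print(result["draft"])
print(result["review"])
```

Because the generator and reviewer are passed in as parameters, swapping either one for a different model requires no change to the workflow itself.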

As users become familiar with the unique characteristics of each model, they can strategically integrate them into their workflows, optimising efficiency and effectiveness.

The multi-model Copilot, therefore, becomes more than just a tool; it evolves into a collaborative partner, empowering users to harness the full potential of AI with confidence and clarity.

Building AI-driven Workflows with Advanced Techniques

The potential of generative AI extends far beyond simple interactions. By integrating AI into existing workflows, businesses can augment human capabilities and unlock new levels of efficiency and creativity.

Our AI Accelerator and multi-model Copilot provide the tools needed to develop sophisticated AI-driven workflows using advanced techniques like prompt engineering, Retrieval Augmented Generation (RAG) and function calling.

Prompt engineering is the art of crafting queries that guide AI models to generate desired outputs. It involves understanding the nuances of how AI interprets language and tailoring prompts to increase the probability of accurate, relevant and creative responses. By mastering prompt engineering, businesses can refine their AI-driven workflows to achieve results that are uniquely suited to their specific needs.
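One common prompt engineering habit is to assemble prompts from named parts instead of writing them free-form each time. The Python sketch below shows the idea; `build_prompt` and its four fields are assumptions chosen for illustration, and other structures may suit your use case better.

```python
def build_prompt(role: str, task: str, context: str, output_format: str) -> str:
    """Assemble a structured prompt from named parts, one part per line."""
    sections = [
        f"You are {role}.",
        f"Task: {task}",
        f"Context: {context}",
        f"Respond as: {output_format}",
    ]
    return "\n".join(sections)


prompt = build_prompt(
    role="a support analyst",
    task="Summarise the customer's complaint in two sentences.",
    context="The customer reports being charged twice for one order.",
    output_format="plain text, no bullet points",
)
print(prompt)
```

Keeping the parts separate makes it easier to vary one element at a time, for example the output format, and to compare how different models respond to the same structure.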

While generative AI models possess vast knowledge, they lack the proprietary business information needed to complete tasks unique to your organisation. RAG addresses this limitation by combining the strengths of language models with external knowledge retrieval.

By storing business-specific information in a vector database, you can use that information along with your foundation models to develop robust AI-driven workflows that reduce hallucinations and provide up-to-date information.

Vector databases store data in the form of mathematical representations, enabling sophisticated search and information retrieval for AI applications. Our AI Accelerator solution comes equipped with a vector database, allowing you to start prototyping right away.
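The retrieval step behind RAG can be illustrated with a toy example in Python. It is a sketch under heavy simplification: the "embedding" here is just a word count, whereas real systems use a learned embedding model and a proper vector database, and the documents and function names are hypothetical. What it does show faithfully is the pattern: turn text into vectors, rank stored documents by cosine similarity to the question, and place the best match in the prompt.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: lower-cased word counts (a stand-in for a real model)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Hypothetical business documents, embedded once and kept in memory.
DOCUMENTS = [
    "refunds are processed within 14 days of the return request",
    "our support desk is open on weekdays from 9 to 5",
    "enterprise plans include a dedicated account manager",
]
INDEX = [(doc, embed(doc)) for doc in DOCUMENTS]


def retrieve(question: str, k: int = 1) -> list:
    """Return the k stored documents most similar to the question."""
    q = embed(question)
    ranked = sorted(INDEX, key=lambda item: cosine(q, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]


def augmented_prompt(question: str) -> str:
    """Build a prompt that grounds the model in the retrieved context."""
    context = "\n".join(retrieve(question))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


print(augmented_prompt("how long do refunds take"))
```

The resulting prompt would then be sent to whichever foundation model suits the task.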

Function calling takes AI-driven workflows to the next level by introducing dynamic interaction. It enables AI models to perform specific actions or calculations as part of their response, such as processing data in real-time or integrating with other software for automated tasks.

Function calling makes AI workflows not just responsive but proactive, capable of executing complex sequences of operations that extend the functionality of AI far beyond simple question-and-answer paradigms.
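On the application side, function calling usually comes down to a small dispatch loop: the model returns a structured request naming a function and its arguments, and your code runs the matching function. The Python sketch below assumes a simple JSON request format and a hypothetical `get_order_status` function; real providers each define their own request schema, so treat the format here as illustrative only.

```python
import json


def get_order_status(order_id: str) -> str:
    """Hypothetical business function; a real one would query an order system."""
    orders = {"A100": "shipped", "A101": "processing"}
    return orders.get(order_id, "unknown")


# Only functions registered here can be invoked on the model's behalf.
TOOLS = {"get_order_status": get_order_status}


def dispatch(model_output: str) -> str:
    """Parse a JSON tool request and run the matching registered function."""
    request = json.loads(model_output)
    name = request["name"]
    if name not in TOOLS:
        raise ValueError(f"Unknown tool: {name}")
    return TOOLS[name](**request["arguments"])


# What a model's tool request might look like (the format is an assumption).
simulated_model_output = (
    '{"name": "get_order_status", "arguments": {"order_id": "A100"}}'
)
status = dispatch(simulated_model_output)
print(status)
```

Restricting execution to an explicit registry of functions is a deliberate design choice: the model can request an action, but the application decides which actions are allowed.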

Cultivating AI Agents from Mature Workflows

By combining prompt engineering, RAG and function calling, you can develop sophisticated AI-driven workflows that automate complex tasks, personalise user experiences and streamline business processes.

A set of mature workflows can develop into an AI agent. Across the spectrum of AI-powered tools, AI agents are the most sophisticated. They are often client-facing, where expectations are higher and mistakes are costly.

AI agents are expected to perform tasks autonomously and intelligently, often learning and adapting based on their interactions and experiences. So the workflows behind an AI agent need to be very mature and well thought through.

Integrating an AI agent into your business is not merely about deploying new technology; it’s about mapping out how this technology can amplify your unique value proposition, streamline operations and deliver unparalleled customer experiences.

This requires a meticulous analysis of existing workflows, customer touch-points and the strategic goals of the organisation. Only through this deep dive can one tailor the AI agent's capabilities to truly resonate with and elevate the business it's designed to transform.

Our Accelerator is designed to help you build AI Copilots, Workflows and Agents for your unique business requirements. Our API platform comes ready with a range of powerful foundation models already integrated, but if you want to develop and deploy your own model, we can help with that process too.

Tailoring Open-Source Models for Bespoke AI

The impetus for organisations to develop their own models is manifold. Foremost amongst these is the desire for autonomy and independence in their AI operations. By creating proprietary models, companies can safeguard their data and ensure that their workflows remain uninterrupted by external updates, thereby maintaining control over their AI infrastructure.

However, the development of such models is often a costly endeavour, requiring substantial resources and expertise. This is where the concept of fine-tuning open-source models comes into play, offering a more economical and practical solution.

Fine-tuning open-source models involves taking a pre-trained model and adapting it to a specific task or dataset. This process, a form of “transfer learning”, leverages the existing knowledge of the model, reducing the amount of data and computational resources required for training.

Open-source models, such as those developed by Mistral AI, are particularly well-suited for fine-tuning. Their models, including Mistral 7B, Mixtral 8x7B and Mixtral 8x22B, are powerful and versatile, making them an excellent starting point for organisations looking to develop their own AI capabilities.

Our Accelerator is designed to facilitate this process. It provides a platform for organisations to fine-tune open-source models, using their own proprietary data, to create bespoke models that meet their specific needs.

This approach not only reduces the cost and complexity of developing AI models from scratch but also allows organisations to retain control over their AI infrastructure. Our Generative AI Lab plays a crucial role in this process.

It provides a space for our team of experts to work closely with clients, helping them to develop and integrate their bespoke models. This collaborative approach ensures that clients can leverage the full potential of their models, while also building their in-house capabilities in AI development.

From Prototype to Production: An Accelerated Journey

The transformation from a prototype to a fully functional generative AI application within production environments epitomises the essence of technological advancement and business modernisation.

This process encapsulates more than just the integration of sophisticated AI models; it is a comprehensive journey that reshapes the core of how organisations operate, make decisions and deliver value.

In the rapidly evolving landscape of artificial intelligence, the journey from ideation to implementation can be fraught with challenges. However, by leveraging the right tools and strategies, organisations can transform these hurdles into stepping stones towards a successful AI-powered future.

The Generative AI Solution Accelerator, powered by Catalyst Hub, is designed to address the unique challenges faced by businesses in their quest to harness the potential of generative AI. By providing a modern, scalable platform that seamlessly integrates with existing systems and processes, Catalyst Hub enables organisations to navigate the complexities of AI adoption with ease.

By combining the power of generative AI with a modern MACH architecture, defined by microservices, API-first, cloud-native and headless design principles, Catalyst Hub offers a future-ready, flexible and scalable platform to build upon. It allows organisations to rapidly prototype, test and deploy AI solutions, while ensuring the highest levels of security, availability and performance.

Moreover, as a cloud-native SaaS platform, Catalyst Hub eliminates the need for costly infrastructure investments and maintenance, enabling businesses to focus on what truly matters – unlocking the value of generative AI for their specific use cases.

With advanced features such as automatic scaling, robust backup and disaster recovery mechanisms, Catalyst Hub ensures that your AI applications remain resilient and reliable, even in the face of unprecedented demand or unforeseen challenges.

As the digital landscape continues to evolve at an astonishing pace, the last mile of AI integration will undoubtedly present its share of hurdles. However, with the right tools, strategies and mindset, embodied by our Generative AI Solution Accelerator and Catalyst Hub, this last mile can become the most rewarding leg of your organisation's digital transformation journey. It is a chance to convert last-mile challenges into celebrated milestones of innovation, growth and competitive advantage.

Ready to take your business to the next level with AI?

Reach out to us today and let's explore the possibilities together.
