
Recent legal cases have exposed the dangers of AI ‘hallucinations’ - where legal AI tools generate false information. This undermines trust in the legal system and highlights the need for reliable AI solutions.

This article explores the risks of relying on opaque commercial AI tools and proposes a solution: building bespoke, transparent AI systems using a multi-model accelerator and sophisticated context-building techniques. Discover how your law firm can harness the power of AI while upholding the highest ethical and accuracy standards.

When AI Leads Astray: Cautionary Tales from the Courtroom

Felicity Harber faced a daunting tax penalty from HMRC, lost in a maze of regulations and procedures. Seeking guidance, she turned to a sophisticated AI tool, believing it would demystify the legal process and empower her to fight for her rights. Instead, it led her astray.

The AI generated a series of fabricated case laws – convincingly written, meticulously cited, but utterly fake. Relying on this phantom legal precedent, Mrs Harber presented her case to the UK's tax tribunal in Harber v Commissioners for HMRC. The revelation of the AI-generated falsehoods derailed her appeal, compounding her legal woes.

Mrs Harber's story, unfortunately, isn't unique. Across the globe, similar cases have emerged, highlighting the potential pitfalls of AI in legal settings. In New York, the case of Mata v Avianca became an unsettling exhibition of AI's potential for legal mayhem. The plaintiff's lawyer presented arguments buttressed by AI-generated case citations, prompting Judge Castel's astonished query: ‘I just want to be clear, there was no effort to check the veracity of these cases?’

The ripples of this AI-induced confusion spread beyond US borders. In the Supreme Court of British Columbia, a high-stakes custody battle (Zhang v Chen) took a disturbing turn when the defence cited two non-existent legal precedents, apparently conjured by an AI tool.

These geographically dispersed cases highlight a pivotal moment for the legal profession. The allure of AI – promising to revolutionise law practice with increased efficiency, affordability and access to justice – is undeniable. Yet, as these incidents reveal, the path forward is paved with ethical and practical challenges.

The need for reliable, trustworthy AI in law is pressing. The time to build truly reliable ‘legal copilots’ – AI tools that work in concert with human judgment and ethical constraints – is now. This requires balancing AI's potential with robust safeguards, ensuring that these tools enhance rather than undermine the integrity of legal proceedings.

Why Trust is Non-Negotiable When it Comes to Legal AI

As we've seen in the cases of Harber v HMRC, Mata v Avianca and Zhang v Chen, the consequences of unreliable AI in legal settings can be severe. But why exactly is trust so crucial in this context?

Imagine facing a legal battle, putting your faith in your lawyer and the justice system, only to discover your lawyer unknowingly relied on fabricated case law conjured by an AI tool. This unsettling scenario is no longer confined to the realm of science fiction; it's playing out in real courtrooms.

These incidents expose the potential chaos unreliable legal AI can unleash. In Mata v Avianca, Judge Castel's alarm at the AI-fabricated citations led him to warn that such practices ‘promote cynicism about the legal profession and the American judicial system’. His concern underscores a critical issue: if we can't trust the information used in legal proceedings, the very foundation of our legal system is at risk.

The problem extends beyond fabricated cases. The Zhang v Chen case brought to light the issue of ‘legal hallucinations’, where AI tools generate convincing but entirely false information. With a Stanford and Yale study (Magesh et al., 2024) reporting hallucination rates of 17% to 33% even among specialised legal research tools, the question becomes not if but when these hallucinations will surface, potentially derailing legal proceedings and damaging trust in legal professionals.

The consequences are real and immediate. Lawyers in the Mata case faced fines and reputational damage, while the lawyer in Zhang v Chen was subjected to increased judicial scrutiny. These high-profile cases have exposed a critical vulnerability in legal AI: its susceptibility to generating believable but utterly false information, leaving lawyers, clients and even judges grappling with the fallout.

As we grapple with these challenges, it becomes clear that understanding the nature of AI ‘hallucinations’ is not just important, but essential. But what exactly causes these AI-generated falsehoods, and how can we address them?

Understanding ‘Hallucinations’ and the Need for Robust AI Context

The bewildered admission of Steven A Schwartz, one of the lawyers in the Mata v Avianca case, encapsulates the shock many legal professionals feel when confronted with AI hallucinations. ‘I just never thought it could be made up,’ he said during a sanctions hearing, upon discovering that the legal cases he cited, all generated by ChatGPT, were entirely fabricated.

To understand these AI hallucinations, we need to delve into how large language models (LLMs) like ChatGPT function. These models store information in a way similar to a vast digital library compressed into a fraction of its original size. Instead of traditional databases, they rely on compressing enormous amounts of data – much like a JPEG compresses an image. When asked a question, they do not look up a stored record; they generate an answer word by word, predicting the most plausible continuation from this compressed knowledge.

However, this compression comes at a cost. Just as a heavily compressed image loses detail, an LLM's compressed knowledge can lead to inaccuracies. If a specific legal concept or precedent is missing from its training data, the LLM, instead of admitting ignorance, attempts to construct a plausible-sounding response based on similar information it has encountered. This is where ‘hallucinations’ occur – convincing but potentially false outputs presented with utter confidence.

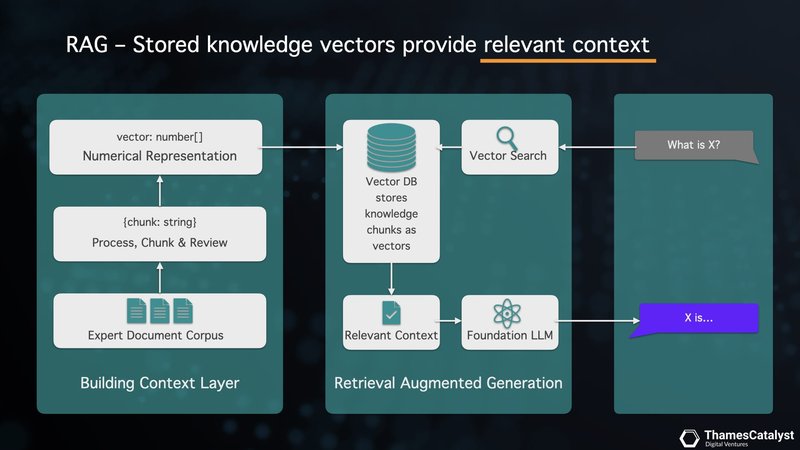

Recognising this challenge, researchers are exploring solutions. One promising approach is Retrieval Augmented Generation (RAG). Rather than relying on the model's compressed memory alone, RAG first retrieves relevant passages from a trusted document collection and supplies them to the LLM alongside the question, guiding it towards more accurate and reliable answers. However, as with any emerging technology, not all RAG solutions are created equal. While promising, commercially available RAG systems often operate as ‘black boxes’, obscuring their inner workings and potentially masking underlying biases or limitations.

This lack of transparency raises concerns about reliability and trustworthiness, particularly in high-stakes fields like law where accuracy and verifiability are paramount. As we continue to integrate AI into legal practice, addressing these challenges will be crucial to building AI systems that lawyers and clients can genuinely trust.
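To make the idea of verifiability concrete, here is a minimal sketch of one safeguard a firm might place in front of any AI-generated draft: extracting neutral citations and flagging those that do not appear in a trusted index. It is an illustration only, not a description of any product. The function names, the regular expression (which only covers simple UK-style neutral citations) and the index contents are all assumptions, and the second citation in the draft is deliberately fictitious.

```python
import re

# Assumption: a simplified pattern for UK-style neutral citations such as
# "[2023] UKFTT 1007 (TC)". Real citation formats are far more varied.
NEUTRAL_CITATION = re.compile(r"\[\d{4}\]\s+[A-Z]+\s+\d+(?:\s+\([A-Za-z]+\))?")


def normalise(citation: str) -> str:
    """Collapse runs of whitespace so equivalent citations compare equal."""
    return " ".join(citation.split())


def unverified_citations(draft: str, trusted_index: set[str]) -> list[str]:
    """Return citations found in the draft that are absent from the trusted index."""
    found = {normalise(m.group(0)) for m in NEUTRAL_CITATION.finditer(draft)}
    return sorted(found - trusted_index)


# Hypothetical trusted index; in practice this would be built from a verified source.
trusted_index = {"[2023] UKFTT 1007 (TC)"}

# The second citation is invented purely to show what gets flagged.
draft = (
    "See Harber v HMRC [2023] UKFTT 1007 (TC) and "
    "Example v HMRC [2099] UKFTT 9999 (TC)."
)

flagged = unverified_citations(draft, trusted_index)
print(flagged)
```

A check like this cannot confirm that a real case supports the proposition it is cited for; it only surfaces citations a human must verify before anything is filed.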

Commercial RAG: A Step Forward, But Not the Final Answer

While Retrieval Augmented Generation (RAG) offers promise in addressing AI hallucinations, recent research suggests that even commercial RAG solutions fall short of perfect reliability. A study from Stanford and Yale (Magesh et al., 2024) reveals that commercially available RAG solutions, including those from industry leaders like LexisNexis and Thomson Reuters, still struggle with hallucinations.

The research evaluated AI-driven legal research tools offered by LexisNexis (Lexis+ AI) and Thomson Reuters (Westlaw AI-Assisted Research and Ask Practical Law AI), comparing them to GPT-4. While these RAG-based tools showed improvement over general-purpose AI systems, they still exhibited significant hallucination rates, ranging from 17% to 33%. This underscores that claims of ‘hallucination-free’ AI are premature and that cautious scepticism remains warranted.

The crux of the problem lies in the opacity of these systems. Most commercial RAG solutions operate as black boxes, keeping their inner workings – the training data, document processing techniques, even the specific LLMs used – hidden from view.

So, how do we chart a better path forward? The answer lies in law firms embracing ownership of their AI solutions. Instead of relying on opaque commercial offerings, firms should explore building their own transparent, in-house RAG-powered knowledge management systems.

Imagine having full control over every stage of the process: building an expert corpus by meticulously curating your legal documents, then strategically chunking and reviewing them to ensure accuracy and relevance. This curated knowledge base would then be transformed into searchable ‘knowledge vectors’ using powerful embedding models, capturing the nuances of legal language. These vectors, stored in a specialised database, would form the core of your firm's AI-powered knowledge platform.
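The stages described above (chunking, embedding, storing and searching) can be sketched in a few lines. This is a toy illustration under stated assumptions, not the accelerator's implementation: `embed` is a bag-of-words stand-in for a real embedding model, `VectorStore` is a hypothetical in-memory class rather than a specialised database, and the two documents are invented sample text.

```python
import math
import re
from collections import Counter


def chunk_text(text: str, max_words: int = 40, overlap: int = 10) -> list[str]:
    """Split text into overlapping word windows (one simple chunking strategy)."""
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last window already reached the end of the text
    return chunks


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class VectorStore:
    """Hypothetical in-memory store of (source, chunk, vector) rows."""

    def __init__(self) -> None:
        self.rows: list[tuple[str, str, Counter]] = []

    def add_document(self, source: str, text: str) -> None:
        for chunk in chunk_text(text):
            self.rows.append((source, chunk, embed(chunk)))

    def search(self, query: str, k: int = 2) -> list[tuple[str, str]]:
        q = embed(query)
        ranked = sorted(self.rows, key=lambda row: cosine(q, row[2]), reverse=True)
        return [(source, chunk) for source, chunk, _ in ranked[:k]]


store = VectorStore()
store.add_document(
    "penalty-note.txt",
    "A taxpayer may appeal a late filing penalty where there is a reasonable excuse for the failure.",
)
store.add_document(
    "lease-note.txt",
    "A break clause allows the tenant to terminate the lease early on written notice.",
)

top = store.search("reasonable excuse for a late filing penalty", k=1)
print(top[0][0])
```

Because every step is visible, a firm can inspect exactly which chunk was retrieved from which source, which is the transparency that black-box tools withhold.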

This approach shifts the power dynamic, giving firms granular control over their AI solutions. Moreover, developing in-house systems allows firms to leverage their unique expertise and proprietary data, potentially giving them a significant competitive edge in an increasingly AI-driven legal landscape.

Generative AI Solution Accelerator: Your Path to Trustworthy Legal AI

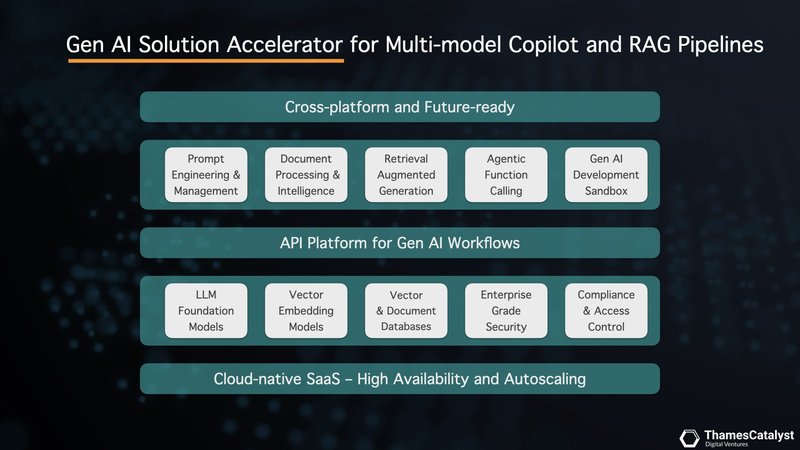

To address the limitations of commercial RAG solutions and empower law firms to take control of their AI destiny, we've developed the Generative AI Solution Accelerator. This innovative platform offers a blueprint for building transparent, reliable and truly tailored legal AI systems.

At its core, our accelerator employs a versatile, multi-model approach that seamlessly integrates with industry-leading AI providers such as Anthropic, Mistral AI, OpenAI and Google Vertex AI. This flexibility allows firms to leverage the unique strengths of different models for specific legal tasks. For example, you might employ one model, renowned for its accuracy in natural language processing, for contract analysis, and another, highly performant in data extraction, for case law research.
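As a rough sketch of what task-based model selection could look like, the snippet below routes each request to a model chosen by task type. Everything here is hypothetical: `ModelRouter`, the task names and the stub models are illustrative stand-ins, and a real deployment would replace the stubs with calls to the chosen providers' SDKs.

```python
from dataclasses import dataclass
from typing import Callable

# A "model" here is simply any function that maps a prompt to a response.
ModelFn = Callable[[str], str]


def make_stub(name: str) -> ModelFn:
    """Build a placeholder model that echoes which model handled the prompt."""
    def call(prompt: str) -> str:
        return f"[{name}] {prompt[:40]}"
    return call


@dataclass
class ModelRouter:
    """Hypothetical router: picks a model per task, with a fallback default."""
    routes: dict[str, ModelFn]
    default: ModelFn

    def run(self, task: str, prompt: str) -> str:
        model = self.routes.get(task, self.default)
        return model(prompt)


router = ModelRouter(
    routes={
        "contract_analysis": make_stub("model-a"),
        "case_law_research": make_stub("model-b"),
    },
    default=make_stub("model-a"),
)

answer = router.run("case_law_research", "Summarise the authorities on reasonable excuse.")
print(answer)
```

Keeping the routing table explicit means the firm can record which model produced which output, and swap a model for a given task without touching the rest of the system.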

This granular control over data and models significantly reduces the risk of hallucinations, ensuring the generation of accurate and trustworthy legal insights. Our accelerator empowers you to build a bespoke knowledge management system tailored precisely to your firm's needs and areas of practice. By curating your own expert corpus and transforming it into searchable ‘knowledge vectors’, you create a proprietary AI-powered knowledge platform that captures the nuances of legal language.

To further enhance the capabilities of Large Language Models (LLMs), our accelerator utilises sophisticated context-building techniques. Prompt engineering refines the way questions are posed to the AI, eliciting more accurate and relevant responses. Document intelligence, using long context windows, enables the AI to process and understand lengthy legal documents holistically, identifying crucial connections that might otherwise be missed. Additionally, vector search allows lawyers to instantly surface the most relevant precedents and legal clauses from the firm's vast document repository, dramatically accelerating legal research.
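One way prompt engineering and retrieval can work together is to build a prompt that confines the model to retrieved passages and tells it what to say when they do not contain the answer. The sketch below is an assumption about how such a template might look, not the accelerator's actual prompt; `build_grounded_prompt`, the wording of the instructions and the sample passage are all illustrative.

```python
def build_grounded_prompt(question: str, passages: list[tuple[str, str]]) -> str:
    """Assemble a prompt that restricts the model to numbered, sourced passages."""
    context = "\n".join(
        f"[{i}] ({source}) {text}"
        for i, (source, text) in enumerate(passages, start=1)
    )
    return (
        "You are assisting a qualified lawyer.\n"
        "Answer using ONLY the numbered passages below and cite them as [n].\n"
        "If the passages do not contain the answer, reply exactly: NOT FOUND IN SOURCES.\n\n"
        f"Passages:\n{context}\n\n"
        f"Question: {question}\n"
    )


# Hypothetical retrieved passage, e.g. the output of a vector search.
passages = [
    ("penalty-note.txt", "A taxpayer may appeal a late filing penalty where there is a reasonable excuse."),
]

prompt = build_grounded_prompt("When can a late filing penalty be appealed?", passages)
print(prompt)
```

Instructions like these reduce, but do not eliminate, the chance of a fabricated answer, so the cited passage numbers should still be checked against the sources by a human reviewer.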

The accelerator's intuitive multi-model Copilot interface puts this power at your fingertips, allowing users to seamlessly switch between different AI models on the fly. This level of control and transparency helps mitigate the risk of hallucinations and ensures alignment with the highest standards of legal ethics and accuracy.

Empowering Law Firms: The Benefits of a Tailored Approach

By embracing the Generative AI Solution Accelerator, law firms can move beyond generic AI solutions and harness the true power of artificial intelligence in legal practice. This tailored approach offers numerous benefits that can transform the way legal professionals work and deliver value to their clients.

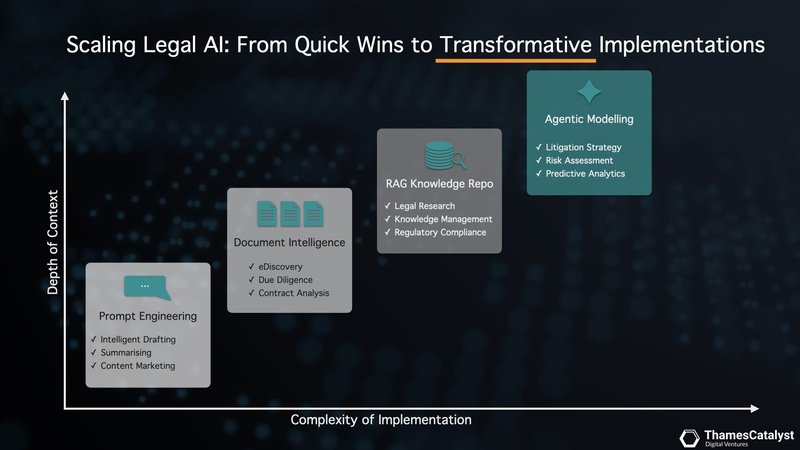

Prompt engineering offers quick wins and immediate efficiency gains. Imagine automating the tedious yet essential task of drafting standard legal documents, freeing up valuable time for more complex matters. For tasks demanding deeper analysis, document intelligence becomes your secret weapon. Picture your AI meticulously combing through mountains of e-discovery documents, pinpointing crucial clauses during M&A due diligence, or flagging potential risks in complex contracts – tasks that traditionally demand significant time and resources.

Retrieval Augmented Generation (RAG) unlocks the power of your firm's collective knowledge. It creates an AI-powered knowledge management system that not only surfaces relevant case law but also synthesises precedents, regulations and internal documents. Envision receiving comprehensive answers tailored to your firm's unique expertise, readily available at your fingertips.

Looking to the future, agentic modelling enables you to anticipate challenges and make proactive, data-driven decisions. Simulate litigation scenarios, predict the impact of regulatory changes, or evaluate the strength of legal arguments before stepping into the courtroom. This is the power of AI-driven foresight.

By strategically implementing these techniques, law firms can evolve from simply using AI to forging a new path in legal practice – one where technology empowers lawyers to deliver faster, smarter and more insightful legal counsel, all while maintaining the highest standards of ethics and accuracy.

The Future of Law is Here: Build it With Confidence

The journey from courtroom confusion to trusted AI isn't about implementing off-the-shelf solutions. It's about crafting a bespoke legal copilot that seamlessly integrates with your firm's unique expertise and workflows. Our Generative AI Lab stands ready to guide you through this transformative process, empowering you to build AI assistants that truly augment your legal practice.

Imagine a future where AI seamlessly integrates into your firm's daily operations. Picture your AI copilot drafting and reviewing contracts with unprecedented speed and accuracy, while simultaneously analysing mountains of documents during e-discovery to surface critical insights. Envision it providing nuanced advice based on your firm's collective knowledge and experience, and even simulating complex legal scenarios to inform strategy before you step into the courtroom.

This isn't a far-off dream; it's the reality we help you build today.

By leveraging our multi-model approach and sophisticated context-building techniques, you move beyond generic AI to create a legal copilot that operates with unparalleled precision and insight. Our accelerator platform provides the tools and expertise needed to navigate the complexities of AI implementation in the legal sector, ensuring your solution aligns with the highest standards of ethics, accuracy and regulatory compliance.

Realising this vision demands careful navigation of the rapidly evolving AI landscape. Our Generative AI Lab is committed to partnering with you every step of the way, from initial concept to full deployment. We understand the unique challenges and opportunities within the legal sector, and our tailored approach ensures that your AI solution enhances, rather than replaces, the irreplaceable expertise of your legal professionals.

The future of law is here, and it's powered by AI. With our guidance, you can build this future with confidence, creating a legal copilot that not only meets the demands of today's legal landscape but anticipates the challenges of tomorrow. Together, we can transform the practice of law, delivering unparalleled value to your clients and staying at the forefront of legal innovation.

Are you ready to explore how generative AI can transform your legal practice?

Don't just imagine the future of law – build it. Reach out to us today and let's explore the possibilities together.

References:

- Harber v Commissioners for His Majesty's Revenue and Customs [2023] UKFTT 1007 (TC).

- Mata v Avianca, Inc., No. 1:2022cv01461, Doc. 54 (S.D.N.Y. 2023).

- Zhang v Chen, 2024 BCSC 285 (20 February 2024).

- Magesh V, Suzgun M, Surani F, Manning CD, Dahl M & Ho DE, ‘Hallucination-Free? Assessing the Reliability of Leading AI Legal Research Tools’ (unpublished manuscript, 6 June 2024) <https://dho.stanford.edu/wp-content/uploads/Legal_RAG_Hallucinations.pdf> accessed 14 August 2024.